Introduction

If extraterrestrial astronomers or space travelers were to train a telescope on present-day planet Earth, they might wonder at the strange appendages that many humans seem to have attached to their hands and ears… Yes, iPhones, Androids and now even smart watches have taken society by storm, for better or worse. Ditto for driverless cars, smart homes, Facebook, Snapchat, online banking, streaming movies, international video calling and a host of other modern conveniences and tools.

All of these wonders are made possible by “Moore’s Law,” the unwritten “law” that semiconductor technology advances roughly by a factor of two every 18 months or so. Moore’s Law has its roots in a 1965 article by Intel co-founder Gordon Moore. He observed,

The complexity for minimum component costs has increased at a rate of roughly a factor of two per year. … Certainly over the short term this rate can be expected to continue, if not to increase. Over the longer term, the rate of increase is a bit more uncertain, although there is no reason to believe it will not remain nearly constant for at least 10 years. That means by 1975, the number of components per integrated circuit for minimum cost will be 65,000.

Moore noted that semiconductor density was doubling every 12 months, but this rate was later modified to roughly 18 months. In any event, Moore’s Law has now continued unabated for 50 years, for an advance of a factor of roughly 2^33, or 8 billion! That means memory chips with 8 billion times as much data as in 1965, and, overall, computer hardware that is 8 billion times as powerful. Moore was originally embarrassed by the eponymous law. But on the 40th anniversary, Intel was happy to celebrate it, and Moore commented that it still seemed to be accurate.
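The arithmetic behind the factor of 8 billion can be checked with a short sketch. The function name `growth_factor` and its parameters are illustrative and not from the original article. It assumes an idealized, perfectly regular doubling period.

```python
def growth_factor(years: float, doubling_months: float) -> float:
    """Cumulative growth after `years`, assuming one doubling every
    `doubling_months` months (an idealized model, not measured data)."""
    doublings = years * 12.0 / doubling_months
    return 2.0 ** doublings


# 50 years at one doubling per 18 months is about 33.3 doublings.
factor_18 = growth_factor(50, 18)

# Rounding down to 33 whole doublings gives a figure of roughly 8 billion.
factor_33 = 2 ** 33

print(f"33.3 doublings: {factor_18:.3g}")
print(f"33 doublings:   {factor_33:,}")
```

For comparison, calling `growth_factor(50, 12)` shows how much larger the factor would be had the original 12-month doubling rate persisted for the full 50 years.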

This sustained exponential rate of progress is without peer in the history of human technology. Here is a graph of Moore’s Law, shown with the transistor count of various computer processors (courtesy Wikimedia):

Present status of Moore’s Law

Will Moore’s Law continue its torrid pace of advance? By one measure, namely processor clock rate, Moore’s Law has already mostly stalled. Today’s state-of-the-art production microprocessors typically have 3.0 GHz clock rates, compared with 2.0 GHz rates five or ten years ago — not a big improvement (although the processors in certain mainframes have clock rates of 5.0 GHz or more). But the industry has simply increased the number of processor “cores” and on-chip cache memory, so that aggregate performance continues to track or exceed Moore’s Law projections.

The capacity of leading-edge DRAM main memory chips continues to advance apace with Moore’s Law. Chips containing 64 Gbyte are currently in production. Memory chips with a 3-D design are being developed jointly by IBM and Micron Technology.

On July 9, 2015, IBM announced that it had produced working prototypes of chips with 7 nanometer features, or, in other words, chips containing four times as many transistors as today’s 14 nanometer chips. By comparison, a helical strand of DNA is 2.5 nanometers in diameter. Thus commercial semiconductor technology is now approaching the molecular and atomic realm.
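The factor of four follows from a simple geometric assumption: if each transistor occupies an area proportional to the square of the feature size, then halving the feature size quadruples the number that fit in the same area. The sketch below encodes only that idealized assumption. The name `density_ratio` is hypothetical, and real process nodes do not necessarily scale this cleanly.

```python
def density_ratio(old_nm: float, new_nm: float) -> float:
    """Idealized gain in transistors per unit area when the feature size
    shrinks from `old_nm` to `new_nm`, assuming area scales as size squared."""
    return (old_nm / new_nm) ** 2


# Moving from 14 nm to 7 nm features under this assumption.
ratio = density_ratio(14, 7)
print(ratio)
```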

Will Moore’s Law continue?

So what are the prospects for the future? Can the tech industry keep Moore’s Law on track? How much longer?

Hewlett Packard Laboratories has been hard at work developing new approaches for microelectronics. HP’s nanotechnology research group has developed a “crossbar architecture,” namely a design where a set of parallel “wires” a few nanometers in width are crossed by a second set of “wires” at right angles. Each point where the “wires” intersect forms an electronic switch, which can be configured for either logic or memory storage use. The group is also investigating nanoscale photonics (light-based devices), which can be deployed either for conventional electronic devices or for emerging quantum computing devices.

At the present time, some of the most promising bets to extend Moore’s Law involve carbon nanotubes, submicroscopic tubes of carbon atoms that have remarkable properties, such as being able to change from conductors to semiconductors (which can be switched) to insulators. For example, Stanford researchers have recently built a prototype computer from carbon nanotubes. A key breakthrough here is the development of an “imperfection-immune” design, or, in other words, one that restricts a device to only those types of nanotubes that have the desired properties. According to Georges Gielen of Katholieke Universiteit in Leuven, Belgium,

[Carbon nanotubes] are an emerging technology that offer the potential to cut down significantly, by orders of magnitude, on the power consumption of electronics compared to today’s state-of-the-art technologies. If this materializes, this will be a major breakthrough for all the mentioned and future applications.

In a 2012 article in The Conversation on the future of Moore’s Law we wrote:

It is not a big leap to imagine that within the next ten years tailored and massively more powerful versions of Siri (Apple’s new iPhone assistant) will be an integral part of mathematics, not to mention medicine, law and just about every other part of human life.

In 2015 we can see traces of this integration everywhere.

Moore’s Law in science and mathematics

So what does this mean for researchers in science and mathematics?

It means plenty. A scientific laboratory typically uses hundreds of high-precision devices that rely crucially on electronic designs. Thus with each step of Moore’s Law, this equipment becomes ever cheaper and more powerful. Perhaps the most dramatic example is DNA sequencing systems. When scientists first completed sequencing a human genome in 2001, at a cost of several hundred million U.S. dollars, observers were jubilant at the advances in equipment that had made this achievement possible. Today, only 14 years later, some commercial firms are producing systems that permit a complete human genome to be sequenced for only about $1000, and prices may be heading even lower. This jaw-dropping development is illustrated with this graph (courtesy the National Human Genome Research Institute):

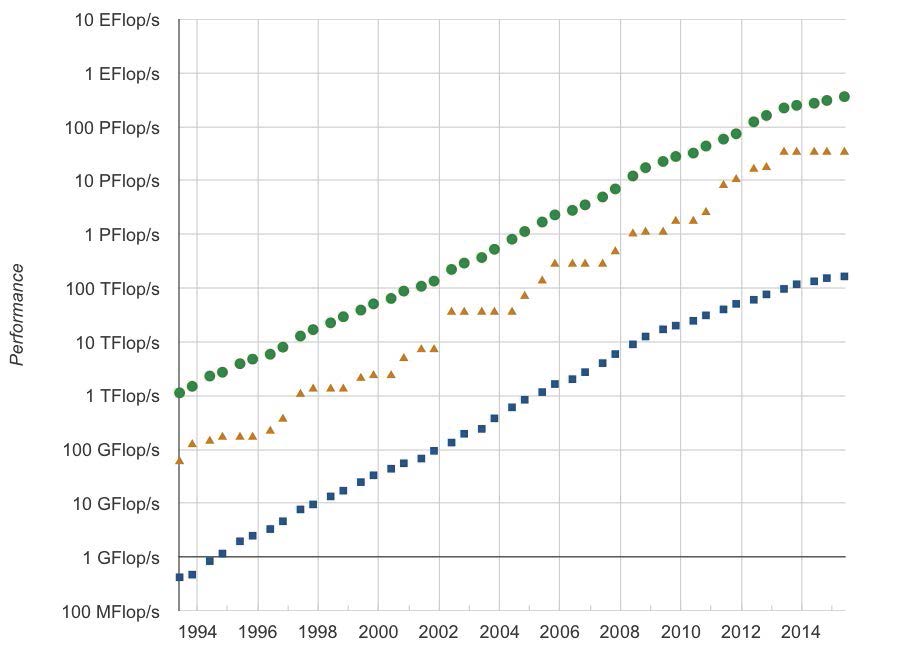

Here is a graph of the Linpack performance of the world’s leading-edge system over the time period 1993-2011 (data courtesy Top 500 site). Note that over this 18-year period, the performance of the world’s #1 system has advanced more than five orders of magnitude. The current #1 system is more powerful than the sum of the world’s top 500 supercomputers just four years ago.
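To put the supercomputer figure in Moore’s Law terms, a growth factor over a given period can be converted into an implied doubling time. The sketch below is a rough back-of-the-envelope calculation that assumes smooth exponential growth over the whole period. The name `doubling_time_months` is illustrative, and the inputs are the round numbers quoted above rather than actual Top 500 data.

```python
import math


def doubling_time_months(factor: float, years: float) -> float:
    """Months per doubling implied by growing by `factor` over `years`,
    assuming steady exponential growth."""
    doublings = math.log2(factor)
    return years * 12.0 / doublings


# Five orders of magnitude (a factor of 100,000) over 18 years.
months = doubling_time_months(1e5, 18)
print(f"{months:.1f} months per doubling")
```

Under these assumptions the implied doubling time is noticeably shorter than 18 months, consistent with the earlier remark that aggregate performance has tracked or exceeded Moore’s Law projections.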

Pure mathematicians have been relative latecomers to the world of high-performance computing. The present authors recall the time, not so long ago, when the prevailing attitude was that “real mathematicians don’t compute.” But thanks to a new generation of mathematical software tools, not to mention the ingenuity of thousands of young, computer-savvy mathematicians worldwide, remarkable progress has been achieved in this arena as well.

For example, in 1963 Daniel Shanks, who himself had calculated pi to 100,000 digits, declared that computing one billion digits would be “forever impossible.” Yet this level was reached in 1989. In 1989, the famous British physicist Roger Penrose, in the first edition of his best-selling book The Emperor’s New Mind, declared that humankind would likely never know whether a string of ten consecutive sevens occurs in the decimal expansion of pi. Yet such a string was found just eight years later, in 1997. The latest computation of pi produced over 12 trillion decimal digits.

Computers are certainly being used for more than just computing and analyzing digits of pi. For example, in 2003 the American mathematician Thomas Hales completed a computer-based proof of Kepler’s conjecture, namely the long-hypothesized fact that the simple way the grocer stacks oranges is in fact the optimal packing for equal-diameter spheres. Recently he completed a computer-verified formal proof of this fact. Computations also played a key role in the recent proof of a result on the gaps between consecutive prime numbers. Many other examples could be cited. Indeed, intense computation now pervades all fields of mathematical research.

Future prospects

What does the future hold? Assuming that Moore’s Law continues unabated at approximately the same rate as the present, and that obstacles in areas such as power management and system software can be overcome, we will see, by the year 2025, large-scale supercomputers that are 100 times more powerful and capacious than today’s state-of-the-art systems — “exaflops” computers. Mathematicians and researchers from many fields eagerly await these systems for calculations, such as advanced climate models, that cannot be done on today’s systems.

Such futuristic hardware, when combined with machine intelligence, such as a variation of the Watson system that defeated the top human contestants in the North American TV game show Jeopardy!, may yield systems inconceivably more powerful and useful than today’s systems. IBM is currently adapting its Watson system for work in cancer research. One medical researcher working on the development team notes, wistfully, that “[Watson] always seems to have the best answers.”

Some observers, such as those in the Singularity movement, are even more expansive, predicting a time just a few decades hence when technology will advance so fast that at the present time we cannot possibly conceive or predict the outcome. Even if more conservative predictions are realized, it is clear that the digital future looks very bright, if daunting. We may well look back at the present day with the same technological disdain with which we currently view the 1960s, when Moore’s Law was first conceived. As Richard Feynman put it in 1959, there still appears to be plenty of room at the bottom.

[Note: A version of this article has appeared in The Conversation.]